Deploy django application with private dependencies on dotcloud

Update: This is an outdated entry. DotCloud went to cloudControl, which joined ExoScale

These days I'm trying to deploy an application on something quicker than my shared host (dreamhost.com). Not that there's anything wrong with this provider (this blog is on it), but the latency is a bit too much (2 seconds is too long for a first-time visitor to wait). I tried to play with heroku (mainly because everyone in the cloud hosting world mentions it...), but I have a feeling that to do some decent testing I'll exceed the 10K-row limit quite quickly.

I've looked for other providers, based on some suggestions (note: some of the solutions mentioned in these posts are no longer viable :( ). So, after looking around, I've figured out that, at least initially, dotcloud will do.

The app's requirements (for testing) are:

- SQL database - just because the support for NoSQL would introduce yet another dependency

- Ability to have an arbitrary number of rows, for data processing (just in case)

- Ability to drop in all kind of apps

- All the help I can get in deployment - although I have experience in development and enterprise deployments, the cloud (and particularly things like heroku or dotcloud) is an entirely different animal

- Ability to deploy an app with private dependencies - most dependencies I have are OSS, but I have some logic developed in house, stored on a local network ;)

- Multiple environments - I guess no one does hot bootstrapping of development code in production...

DotCloud Django Environment

I'm using a Mac for the time being, so I'm familiar with terminal commands and so on. Dotcloud provides a Python-based command-line tool named dotcloud, available via pip.

In addition to the CLI, there are a couple of tutorials that help you create your first django app. They work out of the box if you don't change anything (just copy-paste their content).

There are several things I've noticed while doing my first django deployment:

- The DB (postgres in my case, as per tutorial) has a name "db". You can change it. If you do change it, you'll have to change the environment variables in your settings.py

- The DB takes some moments to become live when you first initialise it. Until then, you'll get 'connection refused' messages. It's free after all, so I can't complain :)

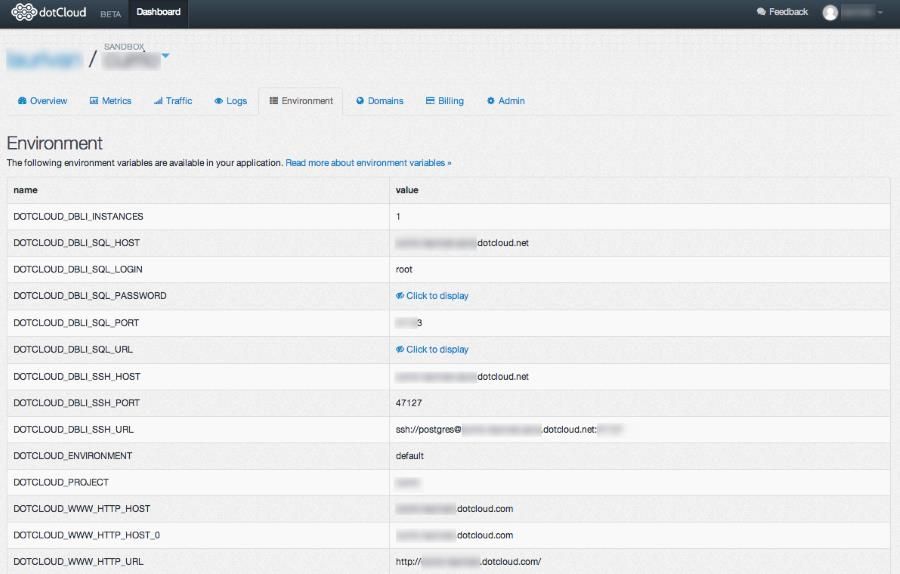

- There's an Environment page in your dashboard. It's very useful as the DB details for example are set there. For a django app, this is good practice (you could have the same variables set on your dev machine and you would not need to change anything).
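
To illustrate that practice, here is a minimal sketch of how settings.py could build the database settings from an environment mapping. It is not dotcloud's official snippet: the helper name database_settings is hypothetical, and the DOTCLOUD_<SERVICE>_SQL_* naming of the variables is an assumption, so check the Environment page for the real names. It does show why renaming the "db" service means touching settings.py.

```python
import os


def database_settings(env, service="db", name="mydb"):
    """Build a Django DATABASES dict from an environment mapping.

    `service` is the name of the database service ("db" in the tutorial).
    The DOTCLOUD_<SERVICE>_SQL_* key pattern is an assumption; `name`
    ("mydb") is a placeholder database name.
    """
    prefix = "DOTCLOUD_%s_SQL_" % service.upper()
    return {
        "default": {
            # Engine path used by older Django releases; newer ones use
            # "django.db.backends.postgresql".
            "ENGINE": "django.db.backends.postgresql_psycopg2",
            "NAME": name,
            "USER": env[prefix + "LOGIN"],
            "PASSWORD": env[prefix + "PASSWORD"],
            "HOST": env[prefix + "HOST"],
            "PORT": env[prefix + "PORT"],
        }
    }


# In settings.py this could be used as:
#     DATABASES = database_settings(os.environ)
```

With the same variables exported on a dev machine, the same settings.py would work in both places.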

The secrecy

In an ideal world, we'd all do open source software and build our apps on it. Everyone with an idea would just need to pick up things and build the glue and the missing bits to create their app. Unfortunately, these days you need to guard your IP. As a result, some of the code is in a private repository, with no access from the outside world. Hence the problem: I somehow need to make the code available to dotcloud.

Initially, I thought stuff works like a fabfile (or ant, or maven): most things happen on your machine and then the build is pushed. Unfortunately, NO. Everything happens on the remote box. This makes sense, because it's the destination box, not yours, that needs to receive the .py files and generate the .pyc files from them. Unfortunately (again), there's no way that a random amazon cloud box would know about your server.localdomain private repository. The conclusion is that you need a way to make that code available. The methods I could find so far included:

- Fabfiles: you'd do stuff locally and then push it to dotcloud - this defeats the purpose of having dotcloud (I can do this already for my host)

- Setting up private distribution repositories like with heroku's gemfury. I guess I could have this feature posted to dotcloud, but there's yet another dependency...

- Setting up vendor dependencies

As seems obvious, vendor dependencies are the way to go...

Django vendor dependencies

Setting up vendor dependencies is something I do for enterprise software: you go get 3rd party libraries, fix the versions and build your product on those versions. You don't go bleeding edge, don't change versions... Boring right? But you get the idea.

Unfortunately, the consensus in the community seems to be that you need to do vendor dependencies. I've looked around for ways to do it, and... there are some options:

- Do a tarball

- Integrate the code into your own tree

Building the tarball is not too feasible as it would need to be pushed to the dotcloud machine and processed.

The only remaining way is to integrate the private code into your deployment code in some shape or form. Initially, I thought for a couple of seconds about merging everything into one blob, like Pinax, but then I remembered the pain of isolating a Pinax app once because I only needed that one. NO :(. There's also the versioning problem: if I fix a bit of code in the "production" (bundled) variant, I then have to push it into the original dependency. Overkill!

Fortunately, git (my poison of choice here) has the concept of submodules. Since both the module and its submodules are on a machine capable of accessing the local repository, cloning the app results in cloned submodules too. Also, dotcloud doesn't use git commands to push the code to their machine; it uses rsync. As a result, all dependencies are pushed onto the server. There are some limitations/caveats to this solution:

- The dependencies are hard dependencies now; you don't have them in the requirements.txt file. You'll need to manage the dependencies via git branches. Maybe later on, when I can afford it, I can invest in a github account.

- I can't have binaries pushed around (haven't tried to create a vendor dependency on something like PIL)

- Your source code increases. Then again, it's your source code. Otherwise you wouldn't have it private :)

HOW TO

The steps to push your full blown app are simple:

- First, you check out your main repository. For example you can clone dotcloud's django app repo. If you do, remember to push it back to your development repository :)

- Then you create a "vendor" directory in your repository.

- For each private dependency, you need to add it as a submodule:

  git submodule add [email protected]:my_dependency.git vendor/django_company

  Now you have all your dependencies' sources

- Now you have two options:

  - Add the explicit paths to your python path, so the dependencies can be found or

  - Build the dependencies remotely.
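
The first option can be sketched roughly as below. This is only an illustration: add_vendor_paths is a hypothetical helper, and it assumes every directory directly under vendor/ is a checkout whose top level contains the importable package.

```python
import os
import sys


def add_vendor_paths(base_dir, vendor_dirname="vendor"):
    """Put each directory under <base_dir>/<vendor_dirname> on sys.path.

    Returns the list of paths that were newly added. `add_vendor_paths`
    is a hypothetical helper, not part of dotcloud or Django.
    """
    vendor_root = os.path.join(base_dir, vendor_dirname)
    added = []
    if not os.path.isdir(vendor_root):
        return added
    for entry in sorted(os.listdir(vendor_root)):
        path = os.path.join(vendor_root, entry)
        # Skip plain files and anything already on the path.
        if os.path.isdir(path) and path not in sys.path:
            sys.path.insert(0, path)
            added.append(path)
    return added


# Possible call from settings.py or wsgi.py, before importing the
# private packages:
#     add_vendor_paths(os.path.dirname(os.path.abspath(__file__)))
```

The paths go at the front of sys.path, so the vendored copy wins over any installed package with the same name.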

The second option can be easier to achieve via the postinstall script, which is readily available in the example.
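
A rough sketch of what such a postinstall script could do, written in Python. The assumptions here are mine: that the hook is an executable run from the application root after the push, that each vendored dependency ships its own setup.py, and that pip is available on the remote box. The function names are hypothetical.

```python
#!/usr/bin/env python
import os
import subprocess
import sys


def install_commands(vendor_root):
    """Return one `pip install <dir>` command per vendored package.

    Only directories that contain a setup.py are considered (assumption:
    every private dependency is packaged that way).
    """
    commands = []
    if not os.path.isdir(vendor_root):
        return commands
    for entry in sorted(os.listdir(vendor_root)):
        path = os.path.join(vendor_root, entry)
        if os.path.isfile(os.path.join(path, "setup.py")):
            commands.append([sys.executable, "-m", "pip", "install", path])
    return commands


def main(vendor_root="vendor"):
    # Run the installs one by one; stop at the first failure.
    for command in install_commands(vendor_root):
        subprocess.check_call(command)


if __name__ == "__main__":
    main()
```

Building remotely keeps the python path untouched, at the price of a slower push.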